Metaspace Architecture

A post in the Metaspace series.

We dive into the architecture of Metaspace. We describe the various layers and components and how they work together.

This will be mainly interesting to folks who want to hack on HotSpot and Metaspace, or who at least want to really understand where that memory goes and why we could not just use malloc.

Like most other non-trivial allocators, Metaspace is implemented in layers.

At the bottom, memory is allocated from the OS in large regions. In the middle, we carve those regions into not-so-large chunks and hand them over to class loaders.

At the top, the class loaders cut up those chunks to serve the caller code.

The bottom layer: The space list

At the bottom-most layer, at the coarsest granularity, memory for Metaspace is reserved from the OS and committed on demand via virtual memory calls like mmap(3). This happens in regions of 2MB (on 64-bit platforms).

These mapped regions are kept as nodes in a global linked list named VirtualSpaceList.

Each node manages a high-water mark separating the committed space from the still-uncommitted space. New pages are committed on demand, when allocations reach the high-water mark. A small margin is committed ahead of need to avoid calling into the OS too often.

This goes on until the node is completely used up. Then a new node is allocated and added to the list, and the old node is “retired” [1].
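The reserve-then-commit behavior of a node can be modeled in a few lines. The following is a minimal Python sketch, not HotSpot code: the class name `VirtualSpaceNodeModel`, the 64K commit granule and the two-granule margin are assumptions made up for illustration, and no memory is actually mapped. The model only tracks offsets and counts how often the node would have called into the OS.

```python
KB = 1024
MB = 1024 * KB

NODE_SIZE = 2 * MB                   # reserved size of one node (64-bit), per the text
COMMIT_GRANULE = 64 * KB             # assumption: hypothetical commit step
COMMIT_MARGIN = 2 * COMMIT_GRANULE   # assumption: hypothetical margin committed ahead of need


class VirtualSpaceNodeModel:
    """One reserved region: [0, top) is in use, [0, committed) is committed."""

    def __init__(self, size=NODE_SIZE):
        self.size = size        # reserved address range, not yet backed by memory
        self.top = 0            # allocation pointer
        self.committed = 0      # high-water mark of committed space
        self.commit_calls = 0   # how often we would have called into the OS

    def allocate(self, nbytes):
        """Return the offset of the new allocation, or None if the node is used up."""
        if self.top + nbytes > self.size:
            return None  # node exhausted: the caller retires it and adds a new node
        new_top = self.top + nbytes
        if new_top > self.committed:
            # Commit up to the need plus a margin, rounded up to whole granules,
            # but never beyond the reserved size of the node.
            want = new_top + COMMIT_MARGIN
            want = ((want + COMMIT_GRANULE - 1) // COMMIT_GRANULE) * COMMIT_GRANULE
            self.committed = min(want, self.size)
            self.commit_calls += 1
        offset = self.top
        self.top = new_top
        return offset
```

Carving 64K pieces out of one node until it is exhausted yields 32 allocations, but only 11 simulated commit calls, because each commit runs ahead of the allocation pointer by the margin.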

Memory is handed out from a node in chunks called Metachunks. They come in three sizes, named specialized, small and medium (the naming is historic), typically 1K, 4K and 64K [2].

The VirtualSpaceList and its nodes are global structures, whereas a Metachunk is owned by one class loader. So a single node in the VirtualSpaceList may, and often does, contain chunks from different class loaders:

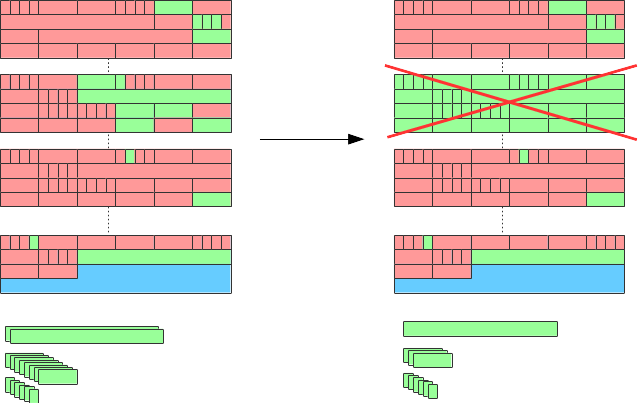

When a class loader and all its associated classes get unloaded, the Metaspace used to hold their class metadata is released. All now-free chunks are added to a global free list (the ChunkManager).

These chunks are reused: if another class loader starts loading classes and allocates Metaspace, it may be given one of the free chunks instead of a newly allocated one.
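The free-list behavior just described can be sketched as a map from chunk size to a list of free chunks. This is a minimal Python model under simplifying assumptions: `ChunkManagerModel` and `Chunk` are hypothetical names, chunks are plain objects, and carving a brand-new chunk from the VirtualSpaceList is only counted, not performed. Everything the text does not mention is left out.

```python
from collections import defaultdict

# Chunk sizes from the text (64-bit), used here as plain constants.
SPECIALIZED, SMALL, MEDIUM = 1 * 1024, 4 * 1024, 64 * 1024


class Chunk:
    def __init__(self, size, owner=None):
        self.size = size
        self.owner = owner  # the class loader currently using this chunk, or None


class ChunkManagerModel:
    """Hypothetical global free list: one list of free chunks per chunk size."""

    def __init__(self):
        self.free = defaultdict(list)
        self.newly_carved = 0  # chunks that had to come from the space list

    def get_chunk(self, size, owner):
        if self.free[size]:
            chunk = self.free[size].pop()  # reuse a chunk freed by a dead loader
        else:
            chunk = Chunk(size)            # stands in for carving from a node
            self.newly_carved += 1
        chunk.owner = owner
        return chunk

    def return_chunks(self, chunks):
        """Called when a loader dies: its chunks become available to others."""
        for chunk in chunks:
            chunk.owner = None
            self.free[chunk.size].append(chunk)
```

For example, three small chunks carved for one loader and returned when it dies can serve the next loader's request for a small chunk, so no new chunk has to be carved.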

The middle layer: Metachunk

A class loader requests memory from Metaspace for a piece of metadata (usually a small amount, some tens or hundreds of bytes), let's say 200 bytes. It will get a Metachunk instead: a piece of memory typically much larger than what it requested.

Why? Because allocating memory directly from the global VirtualSpaceList is expensive. VirtualSpaceList is a global structure and needs locking. We do not want to do this too often, so the loader is given a larger piece of memory, the Metachunk, which it will use to satisfy future allocations faster, concurrently with other loaders, without taking the global lock. Only when that chunk is used up will the loader bother the global VirtualSpaceList again.
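The trade-off can be illustrated with a toy model in which only chunk requests go through a global lock, while individual allocations are served from the loader's current chunk. The names `GlobalSpaceModel` and `LoaderLocalAllocator` are hypothetical; the sketch leaves the loader-local part unsynchronized and assumes each allocator is used by a single thread.

```python
import threading

CHUNK_SIZE = 4 * 1024  # a small chunk, as in the text


class GlobalSpaceModel:
    """Stands in for the VirtualSpaceList: every chunk request takes one global lock."""

    def __init__(self):
        self.lock = threading.Lock()
        self.lock_acquisitions = 0

    def new_chunk(self, size):
        with self.lock:
            self.lock_acquisitions += 1
            return bytearray(size)  # placeholder for a real chunk of memory


class LoaderLocalAllocator:
    """Per-loader allocator: goes to the global space only when its chunk is used up."""

    def __init__(self, space):
        self.space = space
        self.chunk = None
        self.top = 0

    def allocate(self, nbytes):
        if self.chunk is None or self.top + nbytes > len(self.chunk):
            # Slow path: fetch a fresh chunk under the global lock.
            self.chunk = self.space.new_chunk(max(CHUNK_SIZE, nbytes))
            self.top = 0
        # Fast path: bump the local pointer, no global lock involved.
        offset = self.top
        self.top += nbytes
        return self.chunk, offset
```

Serving 100 requests of 200 bytes each from 4K chunks takes the global lock only 5 times, since 20 such requests fit into each chunk.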

How does the Metaspace allocator decide how large a chunk to hand to the loader? Well, it’s all guesswork:

A freshly started standard loader will get small 4K chunks until an arbitrary threshold (4 chunks) is reached, at which point the Metaspace allocator visibly loses patience and starts giving the loader larger 64K chunks.

The bootstrap class loader is known to load a lot of classes, so the allocator gives it a massive chunk right from the start (4M). This is tunable via InitialBootClassLoaderMetaspaceSize.

Reflection class loaders (jdk.internal.reflect.DelegatingClassLoader) and class loaders [3] for anonymous classes are known to load only one class each. So they are given very small (1K) chunks from the start, because the assumption is that they will stop needing Metaspace very soon and giving them anything more would be a waste.
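The guesswork above can be condensed into a small decision function. This is a simplified Python sketch: `next_chunk_size` and the loader-kind strings are hypothetical, and how later chunks are sized for reflection and anonymous-class loaders is an assumption of this model, since the text only describes their first chunk.

```python
KB = 1024
MB = 1024 * KB

SPECIALIZED_CHUNK = 1 * KB
SMALL_CHUNK = 4 * KB
MEDIUM_CHUNK = 64 * KB
SMALL_CHUNK_LIMIT = 4         # the "arbitrary threshold" from the text
BOOT_INITIAL_CHUNK = 4 * MB   # per the text; tunable via InitialBootClassLoaderMetaspaceSize


def next_chunk_size(loader_kind, chunks_so_far):
    """Hypothetical helper: guess how large the next chunk for a loader should be."""
    if chunks_so_far == 0:
        # First chunk: the size depends on what kind of loader is asking.
        if loader_kind == "boot":
            return BOOT_INITIAL_CHUNK
        if loader_kind in ("reflection", "anonymous"):
            return SPECIALIZED_CHUNK  # expected to load a single class
    # Assumption: later chunks (and a standard loader's first chunk)
    # are small until the threshold is reached, then medium.
    if chunks_so_far < SMALL_CHUNK_LIMIT:
        return SMALL_CHUNK
    return MEDIUM_CHUNK
```

Under this model, a standard loader receives four 4K chunks followed by 64K chunks, while the bootstrap loader starts with a single 4M chunk.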

Note that this whole optimization (handing a loader more space than it presently needs, under the assumption that it will need it very soon) is a bet on the future allocation behavior of that loader and may or may not be correct. The loader may stop loading the moment the allocator hands it a big chunk.

This is basically like feeding cats, or small children. You give the small ones a small amount of food; for the large ones you pile it on the plate. And both cats and children may surprise you at any moment by dropping the spoon (the children, not the cats) and walking away, leaving half-eaten plates of memory behind. The penalty for guessing wrong is wasted memory. For tips on how to spot this, please see Part 5.

The upper layer: Metablock

Within a Metachunk we have a second, class-loader-local allocator. It carves up the Metachunk into small allocation units. These units are called Metablocks and are the actual units handed out to the caller (so, for instance, a Metablock houses one InstanceKlass).

This class-loader-local allocator can be primitive and hence fast:

The lifetime of class metadata is bound to the class loader: it is released in bulk when the class loader dies. So the JVM does not need to care about freeing individual Metablocks [4], unlike, say, a general-purpose malloc(3) allocator, which would have to.

Let's examine a Metachunk.

When it is born, it just contains the header. Subsequent allocations are just allocated at the top. Again, the allocator does not have to be smart since it can rely on the whole metadata being bulk-freed.

Note the “unused” portion of the current chunk: since the chunk is owned by one class loader, that portion can only ever be used by the same loader. If the loader stops loading classes, that space is effectively wasted.
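Because nothing is ever freed individually, the loader-local allocator can be a simple bump-pointer allocator. A minimal Python sketch follows; `MetachunkModel`, the 64-byte header and the 8-byte alignment are assumptions for illustration, not HotSpot's actual values.

```python
HEADER_SIZE = 64  # assumption: hypothetical size of the chunk header, in bytes
ALIGNMENT = 8     # assumption: word alignment on a 64-bit VM


class MetachunkModel:
    """A chunk with its header at the bottom and a bump pointer (top) above it."""

    def __init__(self, size):
        self.size = size
        self.top = HEADER_SIZE  # a newborn chunk contains only its header

    def allocate(self, nbytes):
        """Carve a Metablock at the top; return its offset, or None if it does not fit."""
        nbytes = (nbytes + ALIGNMENT - 1) // ALIGNMENT * ALIGNMENT
        if self.top + nbytes > self.size:
            return None  # chunk used up: the loader must ask for a new chunk
        offset = self.top
        self.top += nbytes
        return offset

    def unused(self):
        """Bytes above top: usable only by the owning loader, wasted if it stops loading."""
        return self.size - self.top

    # Deliberately no free(): all Metablocks die together with their loader.
```

Allocating 200 bytes in a fresh 4K chunk returns offset 64 (right above the header); the next allocation starts at 264.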

ClassLoaderData and ClassLoaderMetaspace

The class loader keeps its native representation in a native structure called ClassLoaderData.

That structure has a reference to one ClassLoaderMetaspace structure which keeps a list of all Metachunks this loader has in use.

When the loader gets unloaded, the associated ClassLoaderData and its ClassLoaderMetaspace get deleted. This releases all chunks used by this class loader into the Metaspace freelist. Whether this also releases memory to the OS depends on conditions described below.
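The ownership chain (a ClassLoaderData owning one ClassLoaderMetaspace, which in turn lists the loader's chunks) and what happens on unloading can be modeled as follows. The class and function names are hypothetical Python stand-ins for the native structures named above, the global freelist is just a Python list, and returning memory to the OS is not modeled.

```python
class ClassLoaderMetaspaceModel:
    """Keeps the list of all Metachunks one loader has in use."""

    def __init__(self):
        self.chunks = []


class ClassLoaderDataModel:
    """Native-side representation of a class loader; owns exactly one metaspace."""

    def __init__(self, name):
        self.name = name
        self.metaspace = ClassLoaderMetaspaceModel()


def unload(cld, global_freelist):
    """Delete the loader's metaspace; all of its chunks go back to the global freelist."""
    global_freelist.extend(cld.metaspace.chunks)
    cld.metaspace = None  # the loader no longer references any chunk
```

After `unload`, the chunks are owned by nobody and sit in the freelist, ready to be handed to another loader.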

Anonymous classes

ClassLoaderData != ClassLoaderMetaspace

Note that we kept saying “Metaspace memory is owned by its class loader”, but here we were lying a bit; that was a simplification. The picture gets more complicated with the addition of anonymous classes.

These are constructs generated for dynamic language support. When a loader loads an anonymous class, this class gets its own separate ClassLoaderData whose lifetime is coupled to that of the anonymous class instead of the housing class loader (so the class, and its associated metadata, can be collected before the housing loader is). That means that a class loader has a primary ClassLoaderData for all normally loaded classes, and a secondary ClassLoaderData for each anonymous class.

The intent of this separation is, among other things, to not unnecessarily extend the lifespan of Metaspace allocations for things like Lambdas and Method handles.
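The split between primary and secondary ClassLoaderData can be sketched like this. The names are hypothetical and the model is deliberately tiny: it only shows that the chunks of an anonymous class can go back to the freelist while the housing loader, and its primary ClassLoaderData, stay alive.

```python
class ClassLoaderDataStub:
    """Hypothetical stand-in for a ClassLoaderData: just owns a list of chunks."""

    def __init__(self, label):
        self.label = label
        self.chunks = []


class LoaderModel:
    """A class loader with one primary CLD and one secondary CLD per anonymous class."""

    def __init__(self, name):
        self.primary = ClassLoaderDataStub(name)  # all normally loaded classes
        self.secondary = []                       # one entry per anonymous class

    def load_anonymous_class(self, label):
        cld = ClassLoaderDataStub(label)  # lifetime follows the anonymous class
        self.secondary.append(cld)
        return cld

    def collect_anonymous_class(self, cld, freelist):
        """The anonymous class died: its chunks are freed while the loader lives on."""
        self.secondary.remove(cld)
        freelist.extend(cld.chunks)
        cld.chunks = []
```

Collecting an anonymous class this way returns only that class's chunks; the loader's primary chunks remain in use.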

So, again: when is memory returned to the OS?

Let's look again at when memory is returned to the OS. We can now answer this in more detail than at the end of Part 1.

When all chunks within one VirtualSpaceListNode happen to be free, the node itself is removed: it is taken off the VirtualSpaceList, its free chunks are removed from the Metaspace freelist, and its memory is unmapped and returned to the OS. The node is “purged”.

For all chunks in a node to be free, all class loaders owning those chunks must have died.

Whether or not this is probable depends highly on fragmentation:

A node is 2MB in size; chunks range from 1K to 64K in size; a typical node holds ~150 to 200 chunks. If those chunks have all been allocated by a single class loader, collecting that loader will free the node and release its memory to the OS.

But if instead these chunks are owned by different class loaders with different life spans, nothing will be freed. This may be the case when we deal with many small class loaders (e.g. loaders for anonymous classes or Reflection delegators).
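The effect of fragmentation can be demonstrated with a small simulation. It assumes, for simplicity, that every chunk is a 4K small chunk (real nodes hold a mix of sizes, hence the lower chunk counts mentioned above) and that chunks are laid out in nodes in allocation order; `fill_nodes` and `purgeable_nodes` are hypothetical helpers. A node counts as purgeable only if every chunk in it belongs to a dead loader.

```python
KB = 1024
NODE_SIZE = 2 * 1024 * KB   # one VirtualSpaceList node (64-bit)
CHUNK_SIZE = 4 * KB         # assumption: every chunk is a small 4K chunk


def fill_nodes(owners):
    """Lay out chunks in allocation order; each node is the list of its chunks' owners."""
    per_node = NODE_SIZE // CHUNK_SIZE  # 512 chunks per node under our assumption
    return [owners[i:i + per_node] for i in range(0, len(owners), per_node)]


def purgeable_nodes(nodes, dead_loaders):
    """Indices of nodes whose chunks all belong to dead loaders, so the node can be unmapped."""
    return [i for i, node in enumerate(nodes) if all(owner in dead_loaders for owner in node)]


# Loader A allocates all of its chunks first, then loader B.
sequential = ["A"] * 512 + ["B"] * 512
# Loaders A and B allocate alternately, so their chunks end up side by side.
interleaved = ["A", "B"] * 512

print(purgeable_nodes(fill_nodes(sequential), {"A"}))   # [0]
print(purgeable_nodes(fill_nodes(interleaved), {"A"}))  # []
```

With the same number of chunks, whether the death of loader A frees a node depends entirely on how its chunks were interleaved with those of other loaders.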

Also, note that part of Metaspace (Compressed Class Space) will never be released back to the OS.

TL;DR

- Memory is reserved from the OS in 2MB-sized regions and kept in a global linked list. These regions are committed on demand.

- These regions are carved into chunks, which are handed to class loaders. A chunk belongs to one class loader.

- The chunk is further carved into tiny allocations, called blocks. These are the allocation units handed out to callers.

- When a loader dies, the chunks it owns are added to a global free list and reused. Part of the memory may be released to the OS, but that depends highly on fragmentation and luck.

Footnotes

[1] The remaining space of retired nodes is not lost, but carved up into chunks and added to the global freelist.

[2] Sizes differ on 32-bit VMs, and also depend on whether the chunk is located in the Compressed Class Space or in the non-class Metaspace.

[3] These are not real class loaders, but that distinction is not important for now.

[4] There are rare exceptions where allocated Metaspace can be released before its class is unloaded. For instance, if a class is redefined, parts of the old metadata are not needed anymore. Or, if an error occurs during class loading, we may already have allocated metadata for this class which is now stranded. In all these cases, the Metablock is added to a small free-block dictionary and may be reused for follow-up allocations from that same loader. Such blocks are said to be “deallocated”.